Not sure whether to use local, container, or cloud storage for your self-hosted application? This guide compares the three options and shows which kind of file belongs in which storage.

Introduction to Modern Cloud Applications

This article covers a storage setup from the point of view of a cloud/container application.

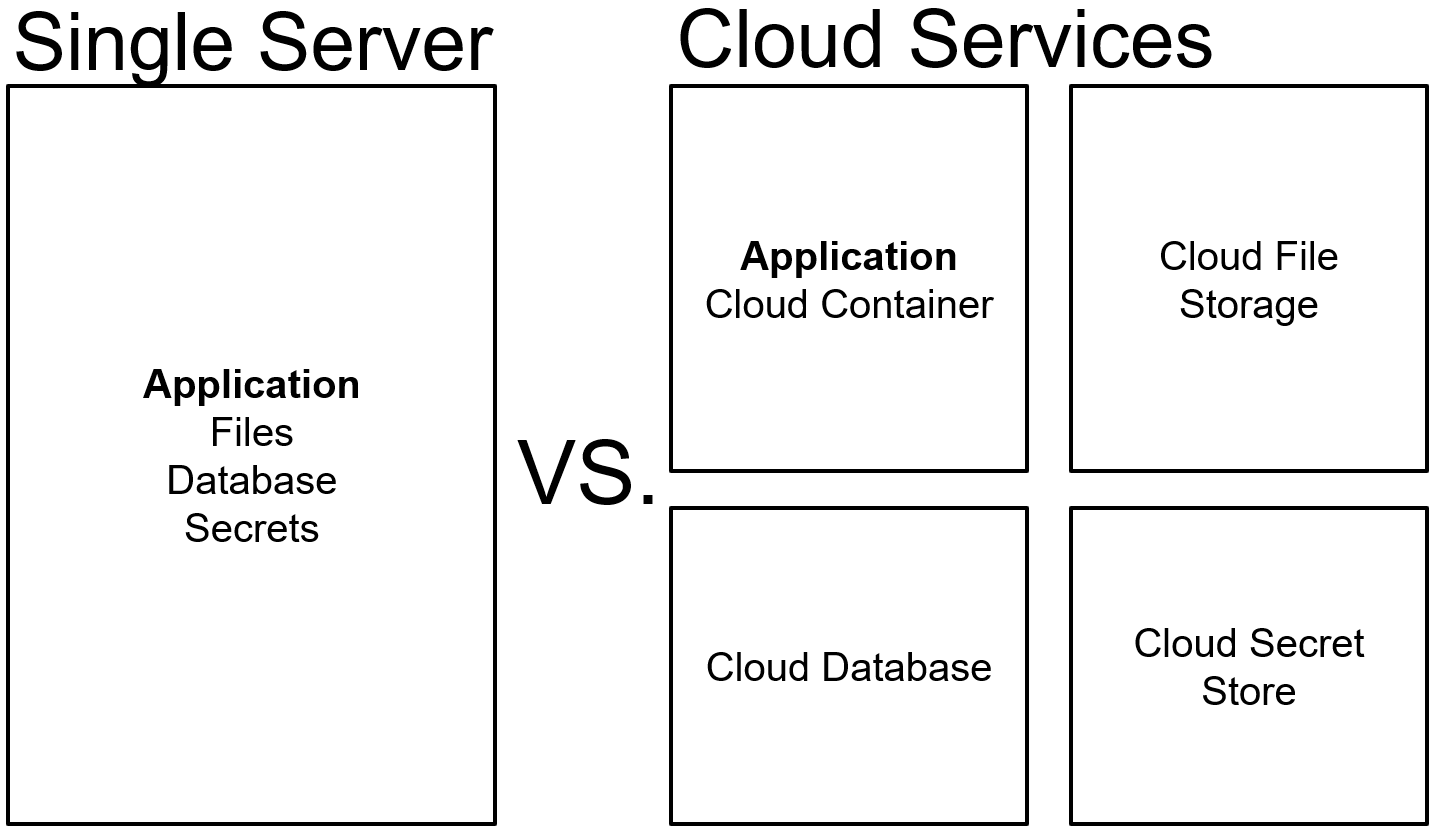

Before the cloud became popular, applications usually stored files on the local storage of the machine they ran on, which comes with a couple of downsides. The biggest one is that the application depends heavily on a single server and disk (note that there are design patterns for mounting disks and even sharing them between multiple servers). Modern cloud applications tackle this problem by splitting the system into multiple independent services. As a result, an application container with the correct configuration can be started on almost any machine or service, in any geographical region, and with any service provider.

Example with Django

To make this concrete, the example application is set up as follows:

- 1 Virtual Machine with Docker installed (Local Storage)

- 1 Docker Container running a Django Application providing Frontend and Backend (Container Storage)

- 1 Azure Storage Account or AWS S3 Bucket (Cloud Storage)

- 1 PostgreSQL Database Service (not relevant in this scenario)

Django makes it easy to connect to different storage backends. There are many step-by-step guides on getting django-storages running, for example https://dev.to/browniebroke/static-vs-media-files-in-django-2k1l.

The Dockerfile contains the following section, which creates the `/opt` directories and gives a non-privileged user ownership of them:

```dockerfile
# add opt directories
ARG PROJECT_DIR=/opt/project
ARG MEDIA_ROOT=/opt/media
# dockeruser is assumed to be created earlier in the Dockerfile
RUN mkdir $PROJECT_DIR $MEDIA_ROOT && \
    chown -R dockeruser:dockeruser $PROJECT_DIR $MEDIA_ROOT
```

The Django settings file `settings.py` contains the following:

```python
MEDIA_ROOT = '/'
TEMP_ROOT = '/opt/media/tmp'
```

`MEDIA_ROOT` is the path to so-called media files: files that are uploaded by users or generated dynamically by the application. Importantly, these files need to remain available in the long run, basically forever. An example is a picture a user uploaded for a blog post such as https://blogbeat.app/blog/maxonce/article/docker-in-production-checklist.

`TEMP_ROOT` is the path to media files that are uploaded by users or generated by the application but are only needed for a very short time, e.g. 30 seconds. An example is a PDF that the application generates and immediately sends to the user over HTTPS.

Local Storage

Local storage is the storage of a machine, such as a server running production containers. To keep it short: a modern cloud application should be independent of where its container runs, so the application should not use local storage to store files.

Container Storage: How to Use It Effectively

Container storage is the storage inside an application container. If you are not familiar with containers, read more about Docker first. For our purposes, Docker offers three options when it comes to storage:

- Mount a Docker volume: If we start a new container, all external files mounted from the Docker volume will be available

- Mount a local volume (a host directory, also called a bind mount): If we start a new container, all external files mounted from the host directory will be available

- Mount no volume: If we start a new container, no external files (only files from the Docker image) will be available
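The three options map onto the `volumes` argument of `containers.run()` in the Docker SDK for Python (the `docker` package), where each key is either a host path or a volume name. Below is a minimal sketch, assuming a Linux host; `build_volumes` and the example names `media-data` and `/srv/media` are hypothetical. It only builds the argument, so it runs without Docker installed:

```python
from typing import Dict, Optional

# Path inside the container, taken from the Dockerfile in this article.
MEDIA_ROOT = "/opt/media"


def build_volumes(option: str, source: Optional[str] = None) -> Dict[str, Dict[str, str]]:
    """Return the `volumes` argument for the Docker SDK's client.containers.run().

    option:
      "docker_volume": `source` is the name of a Docker volume, e.g. "media-data"
      "local_volume":  `source` is an absolute host directory (bind mount)
      "none":          nothing is mounted; only files from the image are available
    """
    if option == "none":
        return {}
    if option not in ("docker_volume", "local_volume"):
        raise ValueError(f"unknown option: {option!r}")
    if not source:
        raise ValueError(f"option {option!r} needs a source")
    # assuming a Linux host: absolute paths are host directories, anything else a volume name
    is_host_path = source.startswith("/")
    if option == "local_volume" and not is_host_path:
        raise ValueError("a local volume must be an absolute host path")
    if option == "docker_volume" and is_host_path:
        raise ValueError("a Docker volume is referenced by name, not by path")
    return {source: {"bind": MEDIA_ROOT, "mode": "rw"}}
```

With the `docker` package installed, `docker.from_env().containers.run("my-django-image", detach=True, volumes=build_volumes("none"))` would start a container without any mounted volume, the option the rest of this article relies on; `my-django-image` is a placeholder image name.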

Given our goal of building a modern application, using a Docker volume or a local volume is essentially the same as using local storage: it creates an unwanted dependency on the host's disk. So does it make sense to think about container storage at all if we are not mounting any volumes?

In our Django example, a VM with Docker installed runs our containers. Docker uses storage drivers to manage each container's own writable storage (Docker manages the local storage for us, and we only deal with Docker's container storage). This also happens if no Docker or local volume has been mounted. The important point is that this writable layer belongs to the container: stopping and restarting the same container keeps its files, but as soon as the container is removed and a new one is created from the image (for example on every redeploy), all files created while the application was running are gone. This is useful for temporary files, e.g. a PDF that the application generates and immediately sends to the user. Every new application container therefore starts from a clean state, simply by relying on Docker's default behaviour.
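As long as a container keeps running, however, every temporary file stays on disk, so a container that runs for weeks can pile up temporary files. Below is a minimal sketch of a cleanup helper, assuming any file in the temporary directory older than a chosen age (60 seconds here, a hypothetical value) is no longer needed. `purge_temp_files` is an illustrative name, not a Django API; it could be called periodically or before writing a new temporary file:

```python
import os
import time


def purge_temp_files(temp_root: str, max_age_seconds: float = 60.0) -> int:
    """Delete regular files directly inside temp_root older than max_age_seconds.

    Returns the number of deleted files. In Django, pass settings.TEMP_ROOT.
    """
    if not os.path.isdir(temp_root):
        return 0
    cutoff = time.time() - max_age_seconds
    deleted = 0
    with os.scandir(temp_root) as entries:
        for entry in entries:
            # only plain files are considered; subdirectories and symlinks are left alone
            if entry.is_file(follow_symlinks=False) and entry.stat().st_mtime < cutoff:
                try:
                    os.remove(entry.path)
                    deleted += 1
                except FileNotFoundError:
                    # another worker process removed it first
                    pass
    return deleted
```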

In the Dockerfile we created the folder `/opt/media` with the correct permissions. To standardize how temporary files are handled, we use a setting called `TEMP_ROOT` that points to a directory for temporary files. `settings.py` includes:

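```python
TEMP_ROOT = '/opt/media/tmp'
```

Use it in Django as `settings.TEMP_ROOT`. Make sure to create the folder if it does not exist:

```python
import os

from django.conf import settings

# create the temporary directory on first use (the Dockerfile only creates /opt/media)
os.makedirs(settings.TEMP_ROOT, exist_ok=True)
temp_file = os.path.join(settings.TEMP_ROOT, 'temp.csv')
```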
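Writing to a fixed name such as `temp.csv` means two concurrent requests can overwrite each other's file. Below is a minimal sketch of a context manager that instead creates a uniquely named file in the temporary directory and deletes it when the block ends. `temp_file_in` is a hypothetical helper that takes the directory as a parameter, so in Django you would pass `settings.TEMP_ROOT`:

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temp_file_in(temp_root: str, suffix: str = "") -> Iterator[str]:
    """Yield the path of a new, empty file in temp_root and delete it afterwards."""
    os.makedirs(temp_root, exist_ok=True)
    # mkstemp creates the file atomically with a unique name
    fd, path = tempfile.mkstemp(suffix=suffix, dir=temp_root)
    os.close(fd)
    try:
        yield path
    finally:
        # remove the file even if the caller raised an exception
        if os.path.exists(path):
            os.remove(path)
```

Inside `with temp_file_in(settings.TEMP_ROOT, suffix='.csv') as path:` the file can be written and sent; once the block exits, the file is removed.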

Cloud Storage: Benefits and Implementation

Cloud storage is a storage service offered by a cloud provider and is usually highly scalable. Cloud providers offer ready-to-use APIs for connecting to their storage services.
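Azure Blob Storage, for example, addresses every stored file (blob) through a URL of the form `https://<account>.blob.core.windows.net/<container>/<blob>`, optionally followed by a SAS token as the query string. Below is a minimal sketch of building such a URL. `blob_url` is a hypothetical helper; it assumes the default `core.windows.net` endpoint and accepts a SAS token with or without its leading `?`:

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str, sas_token: str = "") -> str:
    """Build the HTTPS URL of a blob in an Azure storage account."""
    # drop a leading "/" (e.g. from MEDIA_ROOT = '/') and percent-encode the name,
    # keeping "/" so virtual folders inside the container survive
    path = quote(blob_name.lstrip("/"), safe="/")
    url = f"https://{account}.blob.core.windows.net/{container}/{path}"
    token = sas_token.lstrip("?")
    return f"{url}?{token}" if token else url
```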

Modern cloud applications handle media files through an API connected to cloud storage such as an AWS S3 bucket or a Microsoft Azure storage account. How does this work in our Django example?

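`settings.py`:

```python
# MEDIA_ROOT will be the root path of the storage container
MEDIA_ROOT = '/'

# config for your Azure storage account (replace the placeholders with your own values)
AZURE_STORAGE_ACCOUNT_NAME = '****'
AZURE_STORAGE_SAS_TOKEN = '****'
AZURE_STORAGE_CONTAINER = '****'

# dotted path of the storage class picked up by get_storage() in storage.py
STORAGE_CLASS = 'app.storage.AppStorage'
```

Following the django-storages documentation (https://django-storages.readthedocs.io/en/latest/), we then set up a `storage.py`. In this scenario: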

```python
from django.conf import settings
from storages.backends.azure_storage import AzureStorage


class AppStorage(AzureStorage):
    account_name = settings.AZURE_STORAGE_ACCOUNT_NAME
    # a SAS token goes into `sas_token`; `token_credential` expects an Azure credential object
    sas_token = settings.AZURE_STORAGE_SAS_TOKEN
    azure_container = settings.AZURE_STORAGE_CONTAINER
    overwrite_files = True


def get_storage():
    """
    Return an instance of the storage class named in settings.STORAGE_CLASS.

    globals() returns the dictionary of this module's global symbol table,
    so the class is looked up by name among the classes defined here.
    Depending on the environment, STORAGE_CLASS can name a different class.
    """
    _module_path, class_name = settings.STORAGE_CLASS.rsplit('.', 1)
    return globals()[class_name]()


storage = get_storage()
```
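The `globals()` lookup only finds classes defined in `storage.py` itself and ignores the module part of `STORAGE_CLASS`. Below is a sketch that resolves the full dotted path with `importlib` instead, so the class may live in any importable module. `load_class` is a hypothetical name; Django itself offers `django.utils.module_loading.import_string` for the same purpose:

```python
import importlib
from typing import Any


def load_class(dotted_path: str) -> Any:
    """Return the attribute named by a dotted path such as 'app.storage.AppStorage'."""
    try:
        module_path, class_name = dotted_path.rsplit(".", 1)
    except ValueError:
        raise ImportError(f"{dotted_path!r} is not a dotted path") from None
    # import the module part, then fetch the class from it
    module = importlib.import_module(module_path)
    try:
        return getattr(module, class_name)
    except AttributeError:
        raise ImportError(f"module {module_path!r} has no attribute {class_name!r}") from None
```

With this helper, `storage = load_class(settings.STORAGE_CLASS)()` would replace the body of `get_storage()`.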

A `pytest` test that writes a file to the cloud storage and reads it back:

```python
import pytest

from app.storage import storage


@pytest.mark.django_db
def test_storage():
    # use the same blob name for writing and reading
    file_name = 'test_file.txt'
    text = 'test'

    with storage.open(file_name, mode='w') as f:
        f.write(text)

    # the Azure backend returns bytes, hence the decode
    with storage.open(file_name, mode='r') as f:
        assert f.read().decode('UTF-8') == text

    # remove the test file from the storage container again
    storage.delete(file_name)
```

A little trick lets you serve media files directly from the cloud storage. First, set up a view function called `media` in `views.py`:

```python
from urllib.parse import quote

import requests
from django.conf import settings
from django.http import HttpResponse


def media(request, resource):
    # get blob url (the SAS token may be stored with or without its leading '?')
    sas_token = settings.AZURE_STORAGE_SAS_TOKEN.lstrip('?')
    url = (
        f'https://{settings.AZURE_STORAGE_ACCOUNT_NAME}.blob.core.windows.net/'
        f'{settings.AZURE_STORAGE_CONTAINER}/{quote(resource)}?{sas_token}'
    )
    blob_request = requests.get(url, timeout=30)

    # important: get content_type dynamically and pass the status through (e.g. 404)
    return HttpResponse(
        content=blob_request.content,
        status=blob_request.status_code,
        content_type=blob_request.headers.get('Content-Type', 'application/octet-stream'),
    )
```

Now add a path to your `urls.py`:

```python
from django.urls import path

from app import views

urlpatterns = [
    path('media/<path:resource>', views.media, name='media'),
]
```

Voilà: requesting `/media/<name of the file you want to download>` on your host now fetches the file from the cloud storage and passes it on to the client.

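Important: this view serves any file in the storage container to anyone who requests its name, because the SAS token is added on the server side. Make sure no secret files or other sensitive data are stored in that container.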
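One way to limit this exposure is to serve only blobs under an explicit allowlist of folders. Below is a minimal sketch, assuming public files are kept under a hypothetical `public/` prefix in the storage container. `is_servable` is an illustrative helper that the `media` view could call before fetching the blob, returning `HttpResponseNotFound()` when it yields `False`:

```python
# Hypothetical folders inside the storage container that may be served publicly.
PUBLIC_PREFIXES = ("public/",)


def is_servable(resource: str) -> bool:
    """Return True if the requested blob path lies inside an allowed folder."""
    parts = resource.split("/")
    # reject empty, "." and ".." segments (e.g. "public/../secret.txt" or "public//x")
    if any(part in ("", ".", "..") for part in parts):
        return False
    return resource.startswith(PUBLIC_PREFIXES)
```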

Summary

To create an efficient and modern storage setup for your cloud/container application, you can follow these guidelines:

- Use cloud storage, such as AWS S3 bucket or Microsoft Azure Storage Account, for storing files that need to be retained indefinitely.

- Utilize container storage for temporary file storage, taking advantage of Docker's default behavior to start from a clean state with each new container instance.

- Reserve local storage primarily for deployment processes, avoiding the use of it for storing application files to maintain location independence.